Back to Basics with Visual Feedbacks

Image courtesy of goodfreephotos.com

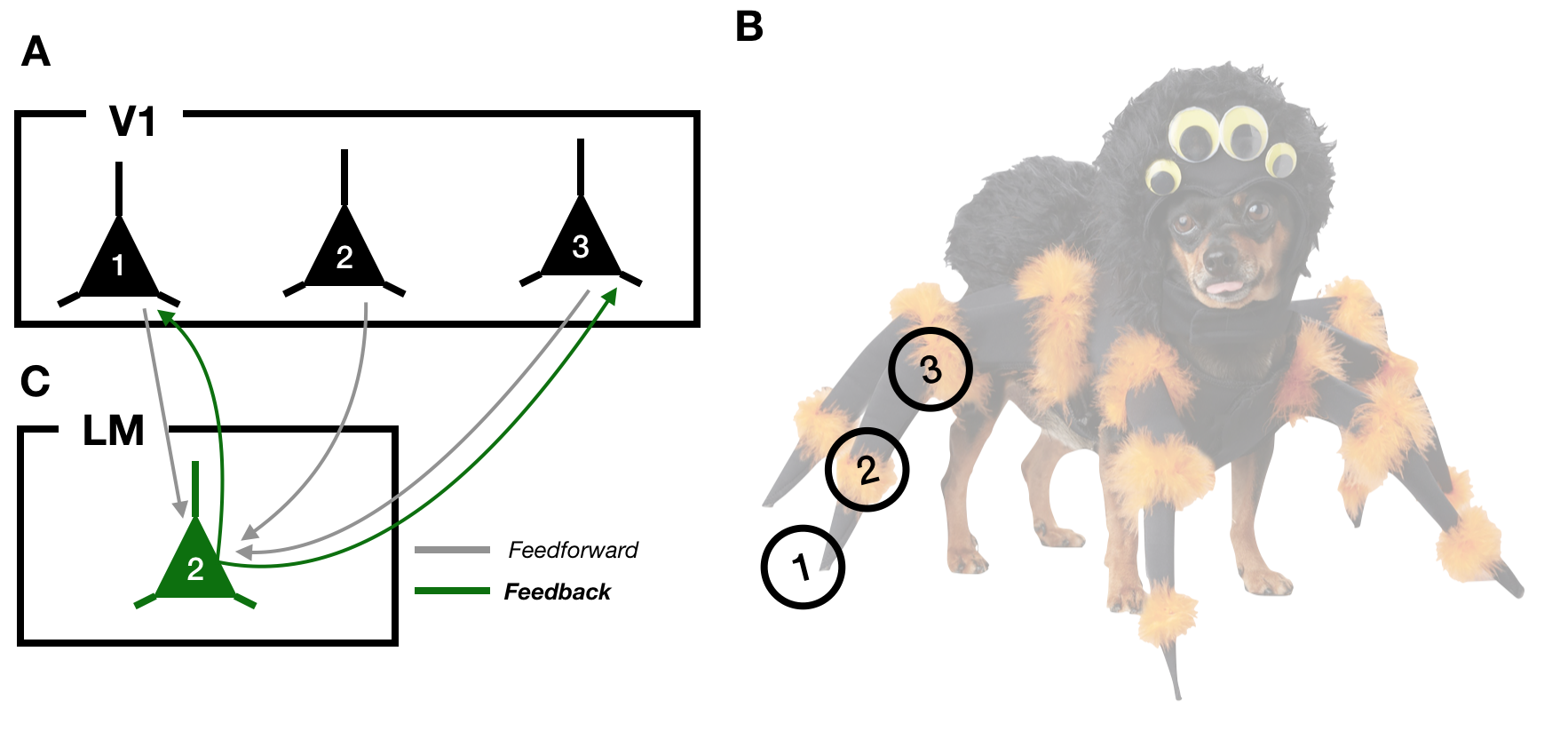

Imagine hearing a rustling in the bushes as you go trick-or-treating in your neighborhood. You spin around to spot a grotesque creature with twelve legs and six eyes staring back at you. A moment later, you realize the monster is actually a dog in a spider costume, hoping for a piece of candy (Diagram 1B). How does your brain deal with this unique visual input? To make sense of our world, light hitting our retinas is processed in stages in the brain. In the mouse brain, visual information is passed from the eyes to primary visual cortex (V1) and onward to other regions, including the lateromedial visual area (LM; Diagram 1A, 1C). While straightforward, this theory of visual processing suffers a nagging complication: there exist abundant reciprocal connections that relay information from the end of the processing pipeline (LM) back to the beginning (V1). These “feedback projections” have been implicated in important cognitive functions, but vision scientists know almost nothing about how feedbacks target cells in V1 and how those connections influence our perception.

Diagram 1: A) A schematic of 3 neurons in primary visual cortex, V1. B) An example visual input, the spider-dog. The circles labeled 1, 2, and 3 correspond to the parts of the image that each neuron in (A) responds to. C) An LM neuron receiving feedforward inputs from V1 (grey lines) and sending feedback projections (green lines) back to a subset of V1 neurons.

To investigate the organization of visual feedbacks, Tiago Marques and his colleagues at the Champalimaud Center for the Unknown studied the mouse visual system (Marques et al., 2018). While there are substantial differences between mouse vision and human vision, feedback projections have been identified in both species and implicated in similar functions, suggesting that the study of mice may help us understand the role of feedbacks in human vision as well.

To understand how the location of feedbacks relates to neural communication, the authors observed neuronal activity in source LM neurons and target V1 neurons simultaneously. By labeling feedback connections from LM to V1 with a fluorescent indicator of neuronal activity, they were able to image the responses of the LM neurons that were connecting back to the earlier portion of the visual processing pipeline while the mice viewed images of bars at different positions and orientations.

Marques focused on two key features of neuronal activity in those areas: retinotopy and orientation selectivity. Retinotopy refers to the tendency for neurons next to each other in the brain to respond to images in adjacent parts of the animal’s visual field. If you’re looking at the spider-dog, for example, the neurons representing the dog’s paw will be near the neurons representing the dog’s leg. Orientation selectivity describes the observation that cells respond most strongly to lines at specific orientations. While the visual system has to deal with scenes as complicated as pets in costumes, probing the response properties of neurons in the visual system with these simpler stimuli allows researchers to understand the building blocks that the brain uses to make sense of more complex images. By showing the mice many lines at different positions on the screen and at different orientations, Marques and his colleagues determined each neuron’s preferred line position and orientation (Figures 1, 2).
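To make "preferred orientation" concrete, here is a minimal sketch of one common way to summarize a neuron's tuning from its responses to bars at several orientations: vector averaging on doubled angles. This is an illustrative assumption, not necessarily the analysis used in the paper; the function name `preferred_orientation` and the response values are hypothetical.

```python
import cmath
import math


def preferred_orientation(orientations_deg, responses):
    """Estimate a preferred orientation (degrees, 0-180) and a selectivity index.

    Assumes non-negative responses, one per tested orientation.
    Hypothetical helper for illustration, not the authors' method.
    """
    # Orientation repeats every 180 degrees, so angles are doubled before
    # averaging; that way 0 and 180 degrees count as the same orientation.
    vec = sum(
        r * cmath.exp(2j * math.radians(o))
        for o, r in zip(orientations_deg, responses)
    )
    total = sum(responses)
    # Halve the resulting angle to return to orientation space.
    pref = (math.degrees(cmath.phase(vec)) / 2.0) % 180.0
    # 0 means untuned; 1 means the cell responds to a single orientation only.
    selectivity = abs(vec) / total if total > 0 else 0.0
    return pref, selectivity


# Made-up responses of one cell to bars at four orientations.
pref, selectivity = preferred_orientation([0, 45, 90, 135], [1.0, 5.0, 1.0, 1.0])
print(round(pref, 1), round(selectivity, 2))
```

With these made-up numbers, the cell responds most strongly at 45 degrees, and the estimate lands on that orientation with a moderate selectivity index.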

The study revealed that although neurons in LM targeted parts of V1 with similar preferred positions (Figure 3), there was considerable variation in the location of their targets. Instead of connecting to the part of V1 that represented exactly the same part of the visual field, LM neurons were connecting to regions of V1 representing parts of the visual field up to 45 visual degrees away: the difference between representing the face or the feet of a person standing one meter in front of you. The authors realized that these discrepancies depended on the orientation preference of the LM neuron sending that projection (Figure 5; Diagram 2). In fact, feedbacks were targeting parts of V1 that encode regions of visual space displaced perpendicular to their preferred orientation. If an LM neuron preferred lines pointing up and to the right, for example, its feedback connections would tend to target parts of V1 representing locations shifted up and to the left, or down and to the right, of the neuron’s own preferred position. While these patterns give clues as to where visual feedbacks are going, their role in altering our perception of the world remains elusive.
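Two pieces of arithmetic in the paragraph above can be sketched in a few lines: how far apart two points are when they sit 45 visual degrees apart at a given viewing distance, and which axis is perpendicular to a preferred orientation. The function names and the angle convention (0 degrees is horizontal, 45 is up and to the right, 135 is up and to the left) are assumptions made for illustration.

```python
import math


def offset_at_distance(angle_deg, distance_m):
    """Distance (meters) between a point straight ahead and a point
    angle_deg away from it, both on a flat surface distance_m away."""
    return distance_m * math.tan(math.radians(angle_deg))


def perpendicular_axis(orientation_deg):
    """Axis at right angles to an orientation, kept in the range 0-180."""
    return (orientation_deg + 90.0) % 180.0


# 45 visual degrees at a viewing distance of one meter.
offset = offset_at_distance(45.0, 1.0)

# An LM neuron preferring bars tilted up and to the right (45 degrees).
axis = perpendicular_axis(45.0)

print(round(offset, 2), axis)
```

Under these assumptions, 45 degrees at one meter corresponds to a shift of about one meter, the same order of magnitude as the face-to-feet comparison, and a neuron preferring up-and-to-the-right bars has a perpendicular axis running up and to the left.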

Diagram 2: Filtering source LM neurons by orientation preference reveals systematic patterns in the region of V1 they project to. Adapted from Figure 5.

One possibility is that feedbacks from LM are silencing regions in V1 that don’t align with the preferred orientation of the LM neuron. This computation could serve to sharpen our perception by enhancing V1 responses to lines at certain orientations and suppressing others. While future work will be needed to understand the organization of feedback connections in other sensory modalities and species, these findings bring us one step closer to understanding how we perform important visual tasks like distinguishing cute dogs from terrifying spiders.

Edited by Kristin Muench

References:

Marques, Tiago, et al. "The functional organization of cortical feedback inputs to primary visual cortex." Nature Neuroscience 21.5 (2018): 757.